Google Cloud Endpoints

After a lot of fighting and tricky debugging, I managed to get Google Cloud Endpoints working for some Rust gRPC services. So that others can benefit from it, I've documented the key parts of the process here.

Overview of Google Cloud Endpoints:

Example Service

Let's start with an already-implemented hello world gRPC service; in this example, the service listens on port 50095.

Protocol Buffers schema - example.proto:

```proto
syntax = "proto3";

package example.helloworld;

// The greeting service definition.
service Greeter {
  // Sends a greeting
  rpc SayHello (HelloRequest) returns (HelloReply) {}
}

// The request message containing the user's name.
message HelloRequest {
  string name = 1;
}

// The response message containing the greetings
message HelloReply {
  string message = 1;
}
```

Google Cloud Endpoints reads your service’s API from a protobuf descriptor set file, which you can generate with the protobuf compiler, protoc:

```shell
protoc --proto_path=. --include_imports --include_source_info --descriptor_set_out=example.pb example.proto
```

The descriptor set name doesn’t actually matter; I’ll use example.pb in this example.
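If you would rather regenerate the descriptor set from Rust (for example, from a small helper binary or a Cargo build script) than by hand, the same protoc invocation can be assembled with `std::process::Command`. This is a sketch: the helper name `descriptor_set_command` is hypothetical, the code only builds the command, and actually running it assumes protoc is on the PATH.

```rust
use std::process::Command;

/// Hypothetical helper: builds the protoc call that writes a self-contained
/// descriptor set (`--include_imports`) that also keeps source info such as
/// comments (`--include_source_info`). Nothing runs until status()/output().
fn descriptor_set_command(proto_path: &str, proto_file: &str, out: &str) -> Command {
    let mut cmd = Command::new("protoc");
    cmd.arg(format!("--proto_path={}", proto_path))
        .arg("--include_imports")
        .arg("--include_source_info")
        .arg(format!("--descriptor_set_out={}", out))
        .arg(proto_file);
    cmd
}

fn main() {
    let cmd = descriptor_set_command(".", "example.proto", "example.pb");
    // Print instead of executing; to run protoc, make `cmd` mutable and call cmd.status().
    println!("{:?}", cmd);
}
```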

Endpoint Configuration

The endpoint is configured with a YAML service configuration file (api_config.yaml). This file specifies the title of the service (here, Example gRPC API), the name of the service where the endpoint is deployed (here, example.endpoints.ea-seed-gcp.cloud.goog), the authentication/security rules (ignored in this example), and the protobuf service path.

The service path (here, example.helloworld.Greeter) must exactly match your protobuf file: take the package name (example.helloworld) and append the service name (Greeter).

```yaml
# The configuration schema is defined by the service.proto file
# https://github.com/googleapis/googleapis/blob/master/google/api/service.proto
type: google.api.Service
config_version: 3

# Name of the service configuration
name: example.endpoints.ea-seed-gcp.cloud.goog

# API title to appear in the user interface (Google Cloud Console)
title: Example gRPC API

apis:
  - name: example.helloworld.Greeter

# API usage restrictions
usage:
  rules:
    # Allow unregistered calls for all methods.
    - selector: "*"
      allow_unregistered_calls: true
```
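The service-path rule above fits in a couple of lines. In this sketch the helper names are hypothetical: `qualified_service_name` builds the value that `apis[].name` must match, and `grpc_method_path` builds the HTTP/2 `:path` a gRPC client sends for a call (`/<package>.<Service>/<Method>`, as defined by the gRPC-over-HTTP/2 protocol), which is what ESP sees on the wire.

```rust
/// Fully qualified protobuf service name: `<package>.<Service>`.
/// This is the value the `apis[].name` entry in api_config.yaml must match.
fn qualified_service_name(package: &str, service: &str) -> String {
    if package.is_empty() {
        // A .proto without a `package` statement uses the bare service name.
        service.to_string()
    } else {
        format!("{}.{}", package, service)
    }
}

/// HTTP/2 `:path` sent by a gRPC client for one method call.
fn grpc_method_path(package: &str, service: &str, method: &str) -> String {
    format!("/{}/{}", qualified_service_name(package, service), method)
}

fn main() {
    println!("{}", qualified_service_name("example.helloworld", "Greeter"));
    println!("{}", grpc_method_path("example.helloworld", "Greeter", "SayHello"));
}
```

The fully qualified method name (example.helloworld.Greeter.SayHello) is also the form a per-method `selector` takes in the usage rules, as an alternative to the catch-all "*".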

With a valid api_config.yaml and the generated example.pb, the endpoint can be deployed to GCP:

```shell
gcloud endpoints services deploy example.pb api_config.yaml
```

A successful deployment looks like this:

```text
% Operation finished successfully. The following command can describe the Operation details:
% gcloud endpoints operations describe operations/serviceConfigs.example.endpoints.ea-seed-gcp.cloud.goog:21066c83-6899-4870-88a0-78da0c630d88
% Operation finished successfully. The following command can describe the Operation details:
% gcloud endpoints operations describe operations/rollouts.example.endpoints.ea-seed-gcp.cloud.goog:1d62ceec-05f8-46c9-8184-595eff79391e
% Enabling service example.endpoints.ea-seed-gcp.cloud.goog on project ea-seed-gcp...
% Operation finished successfully. The following command can describe the Operation details:
% gcloud services operations describe operations/tmo-acf.118fae92-8cc8-48d4-b0d3-fee58a1afac8
% Service Configuration [2019-01-17r0] uploaded for service [example.endpoints.ea-seed-gcp.cloud.goog]
% To manage your API, go to: https://console.cloud.google.com/endpoints/api/example.endpoints.ea-seed-gcp.cloud.goog/overview?project=ea-seed-gcp
```

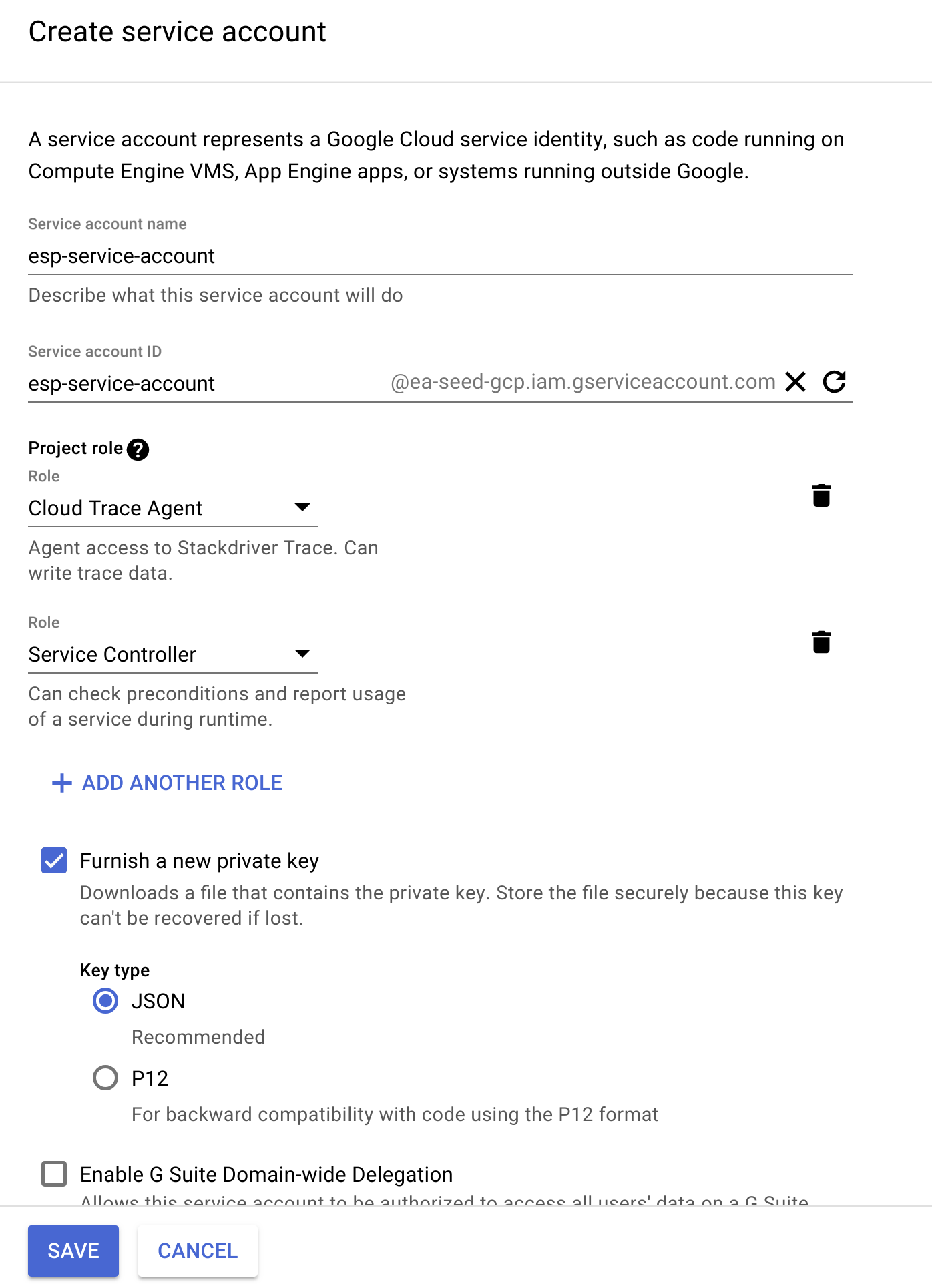

GCP Service Account and Secret

The ESP container needs to authenticate against Google Cloud (to fetch its service configuration, report API usage, and send traces), so a service account must exist with the following roles:

- Service Controller

- Cloud Trace Agent

Creating a key for this service account produces a .json credentials file that looks something like this:

```json
{
  "type": "service_account",
  "project_id": "ea-seed-gcp",
  "private_key_id": "REDACTED",
  "private_key": "-----BEGIN PRIVATE KEY-----\nREDACTED\n-----END PRIVATE KEY-----\n",
  "client_email": "esp-service-account@ea-seed-gcp.iam.gserviceaccount.com",
  "client_id": "108507644160716230735",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://accounts.google.com/o/oauth2/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/esp-service-account%40ea-seed-gcp.iam.gserviceaccount.com"
}
```

Use this file to create a new secret in Kubernetes:

```shell
kubectl create secret generic esp-service-account-creds \
  --from-file=$HOME/ea-seed-gcp-cb09231df1e9.json
```
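One detail worth double-checking: `kubectl create secret generic --from-file=PATH` uses the file's basename as the secret key, and a secret volume exposes each key as a file under the volume's `mountPath`. So the `--service_account_key` path passed to ESP in the manifest below has to be the mount path joined with that basename. The helper below is a hypothetical sketch of that calculation; `$HOME` is replaced by an example path.

```rust
use std::path::Path;

/// Where a key created with `--from-file=<from_file>` appears inside a secret
/// volume mounted at `mount_path`: the key name is the file's basename.
fn mounted_key_path(mount_path: &str, from_file: &str) -> Option<String> {
    let key = Path::new(from_file).file_name()?.to_str()?;
    Some(Path::new(mount_path).join(key).to_string_lossy().into_owned())
}

fn main() {
    // Mount path from the ESP volumeMount; the home directory is an example.
    let path = mounted_key_path("/etc/nginx/creds", "/home/me/ea-seed-gcp-cb09231df1e9.json");
    // This should equal the value of ESP's --service_account_key argument.
    println!("{:?}", path);
}
```

If you would rather choose the key name explicitly, kubectl also accepts `--from-file=KEY=PATH`.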

Kubernetes Deployment

The following manifest deploys our example gRPC service (example-service), which listens on port 50095.

- gRPC clients can simply access the load balancer (via its external IP) on port 80. All traffic (gRPC and JSON over HTTP/2) is forwarded to the ESP proxy on port 9000.

- The ESP proxy is a modified nginx server: it routes gRPC traffic directly to the backend (here, example-service on port 50095), or transcodes HTTP/1.1 and JSON/REST requests into HTTP/2 and gRPC before routing them to the backend.
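All containers in a pod share one network namespace, so ESP reaches the service container over 127.0.0.1, and the port in ESP's `--backend=grpc://127.0.0.1:50095` argument (in the manifest below) has to match the service's `containerPort`. As a hypothetical sanity check, a manifest linter could split that flag value like this; the sketch only handles the simple `scheme://host:port` form (no IPv6 literals).

```rust
/// Splits an ESP `--backend` value such as `grpc://127.0.0.1:50095`
/// into (scheme, host, port). Returns None for anything else.
fn parse_backend(flag_value: &str) -> Option<(&str, &str, u16)> {
    let (scheme, rest) = flag_value.split_once("://")?;
    let (host, port) = rest.rsplit_once(':')?;
    let port = port.parse().ok()?;
    Some((scheme, host, port))
}

fn main() {
    let container_port: u16 = 50095; // containerPort of example-service
    match parse_backend("grpc://127.0.0.1:50095") {
        Some(("grpc", _, port)) if port == container_port => {
            println!("ESP forwards gRPC to the service container on port {}", port)
        }
        other => println!("backend mismatch: {:?}", other),
    }
}
```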

Features implemented below:

- Keel auto-deploy

- Jaeger agent side-car

- Load balancing across N replicas

- Publicly accessible external IP

- Google Cloud Endpoints proxy (ESP)

- Mount service account secret into volume path for ESP

Kubernetes manifest - example-service.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-service
  labels:
    name: "example-service"
    keel.sh/policy: force
    keel.sh/trigger: poll
  annotations:
    # keel.sh/pollSchedule: "@every 10m"
spec:
  selector:
    matchLabels:
      app: example-service
  replicas: 4
  template:
    metadata:
      labels:
        app: example-service
    spec:
      volumes:
        - name: esp-service-account-creds
          secret:
            secretName: esp-service-account-creds
      containers:
        - name: example-service
          image: gcr.io/ea-seed-gcp/example-service
          ports:
            - containerPort: 50095
        - name: esp
          image: gcr.io/endpoints-release/endpoints-runtime:1
          args:
            - "--http2_port=9000"
            - "--service=example.endpoints.ea-seed-gcp.cloud.goog"
            - "--rollout_strategy=managed"
            - "--backend=grpc://127.0.0.1:50095"
            - "--service_account_key=/etc/nginx/creds/ea-seed-gcp-cb09231df1e9.json"
          ports:
            - containerPort: 9000
          volumeMounts:
            - mountPath: /etc/nginx/creds
              name: esp-service-account-creds
              readOnly: true
        - name: jaeger-agent
          image: jaegertracing/jaeger-agent
          ports:
            - containerPort: 5775
              protocol: UDP
            - containerPort: 6831
              protocol: UDP
            - containerPort: 6832
              protocol: UDP
            - containerPort: 5778
              protocol: TCP
          command:
            - "/go/bin/agent-linux"
            - "--collector.host-port=infra-jaeger-collector.infra:14267"
---
apiVersion: v1
kind: Service
metadata:
  name: example-service
  labels:
    app: example-service
spec:
  type: LoadBalancer
  ports:
    # Port that accepts gRPC and JSON/HTTP2 requests over HTTP.
    - port: 80
      targetPort: 9000
      protocol: TCP
      name: http2
  selector:
    app: example-service
```

The manifest can be deployed normally:

```shell
$ kubectl apply -f example-service.yaml
deployment.apps "example-service" created
service "example-service" created
```

If everything deploys correctly, you can confirm that the load balancer is running and has an external IP (it can take a couple of minutes to be created):

```shell
$ kubectl get svc example-service
NAME              TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
example-service   LoadBalancer   172.20.6.70   35.240.13.93   80:30234/TCP   59s
```
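The PORT(S) column packs two numbers together: `80:30234/TCP` means the service listens on port 80 and was also allocated node port 30234 on every node. The pods' `targetPort` (9000, the ESP container) is not shown in this column. A small hypothetical parser makes the format explicit:

```rust
/// One entry of the PORT(S) column printed by `kubectl get svc`.
/// `80:30234/TCP` = service port 80, node port 30234, protocol TCP.
#[derive(Debug, PartialEq)]
struct ServicePort<'a> {
    port: u16,
    node_port: Option<u16>, // shown only for NodePort/LoadBalancer services
    protocol: &'a str,
}

/// Parses a PORT(S) value; returns None if any entry is malformed.
fn parse_service_ports(column: &str) -> Option<Vec<ServicePort<'_>>> {
    column
        .split(',') // several ports are listed comma-separated
        .map(|entry| {
            let (ports, protocol) = entry.split_once('/')?;
            let (port, node_port): (u16, Option<u16>) = match ports.split_once(':') {
                Some((p, n)) => (p.parse().ok()?, Some(n.parse().ok()?)),
                None => (ports.parse().ok()?, None),
            };
            Some(ServicePort { port, node_port, protocol })
        })
        .collect()
}

fn main() {
    // PORT(S) value from the `kubectl get svc example-service` output above.
    println!("{:?}", parse_service_ports("80:30234/TCP"));
}
```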

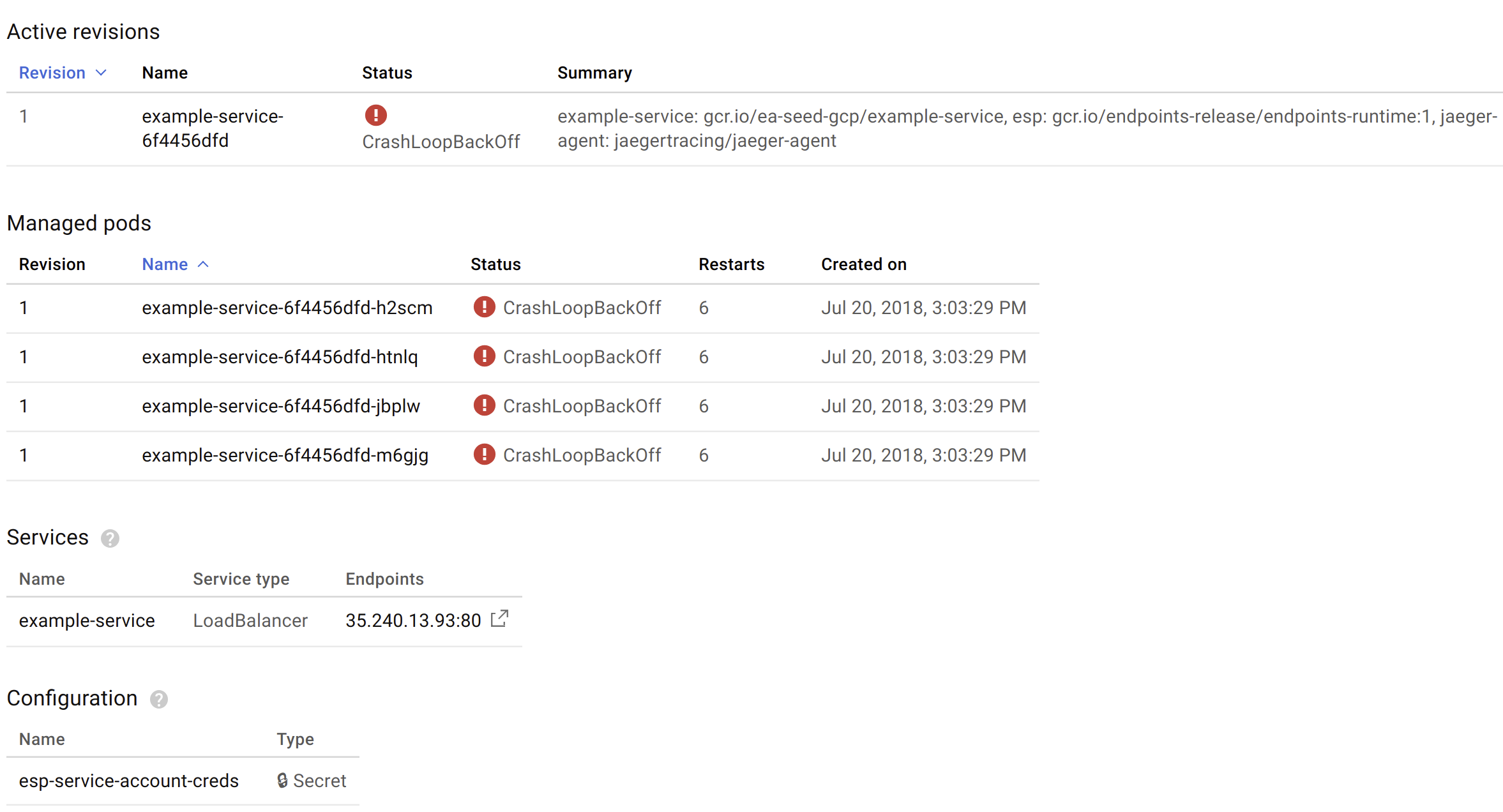

Though, even if the load balancer is up, you also need to confirm that the pods being served by the load balancer are also running successfully:

If you get errors like the above, check the log files to diagnose the reason.

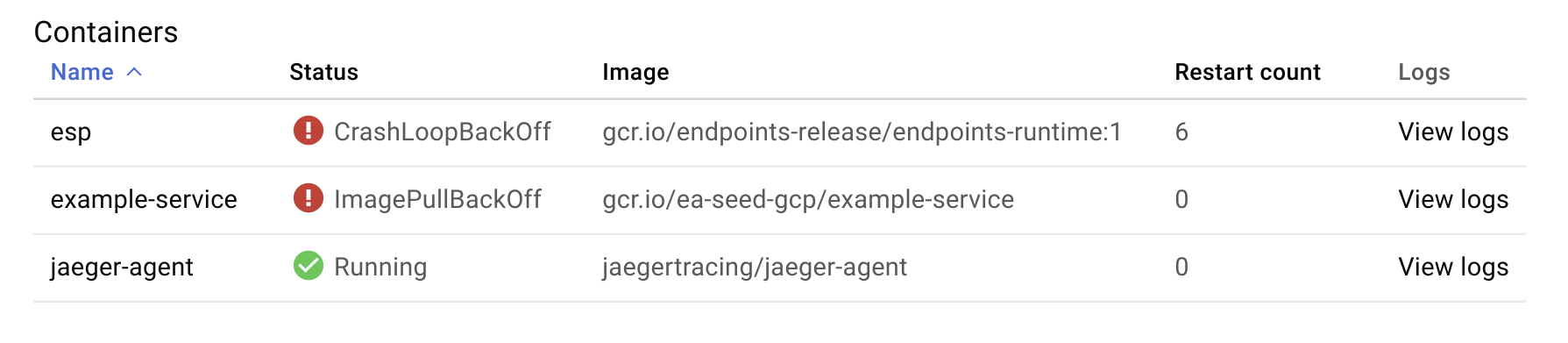

In this example, I clicked on one of the example-service* pods that lists CrashLoopBackOff:

The last container to worry about is the ESP itself. The issue here is our example-service container isn’t deploying correctly. ImagePullBackOff usually signifies that GCP cannot fetch the Docker image from the registry (i.e. gcr.io/ea-seed-gcp/example-service). It looks like I forgot to deploy the example-service image to gcr.io, oops!

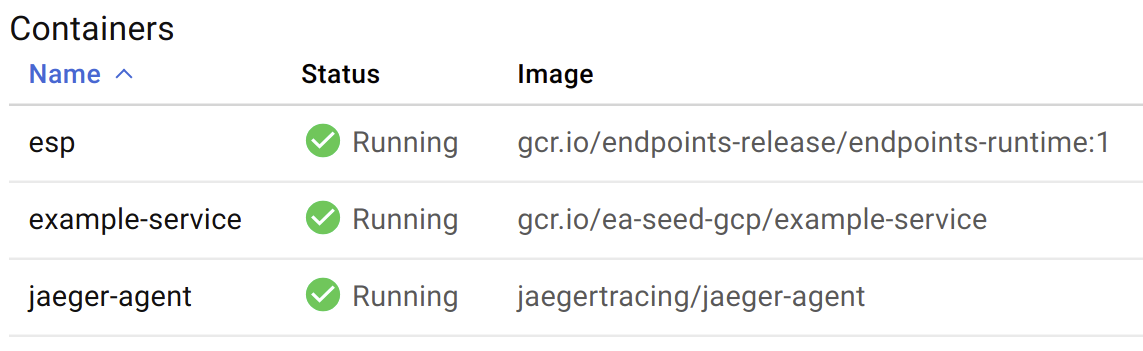
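The waiting reason shown for each container points at a different kind of failure. As a rough rule of thumb (not an exhaustive list), the mapping looks something like this; `triage` is a hypothetical helper and its advice strings are suggestions, while the reason names are the ones Kubernetes reports.

```rust
/// Rough first-step hints for common container waiting reasons shown by
/// `kubectl get pods` / `kubectl describe pod`.
fn triage(reason: &str) -> &'static str {
    match reason {
        "ErrImagePull" | "ImagePullBackOff" => {
            "the node cannot pull the image: check that the image name/tag exists in the registry and is pullable"
        }
        "CrashLoopBackOff" => {
            "the container starts and keeps exiting: read `kubectl logs <pod> -c <container> --previous`"
        }
        "ContainerCreating" => {
            "still starting; if it stays here, check Events for FailedMount (e.g. a missing Secret volume)"
        }
        _ => "run `kubectl describe pod <pod>` and check the Events section",
    }
}

fn main() {
    for reason in &["ImagePullBackOff", "CrashLoopBackOff"] {
        println!("{}: {}", reason, triage(reason));
    }
}
```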

After uploading the example-service image, things look much better:

Client Connection

With the Kubernetes deployment (load balancer, ESP proxy, and gRPC service) running correctly, you should be able to connect to the load balancer (here, 35.240.13.93 on port 80) with a gRPC client generated from the same example.proto schema.

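```rust
extern crate env_logger;
extern crate futures;
extern crate futures_cpupool;
extern crate grpc;
extern crate protobuf; // required by the generated message code

// Modules generated from the proto with protoc-rust-grpc. Their location
// (helloworld.rs and helloworld_grpc.rs next to this file) is an assumption.
mod helloworld;
mod helloworld_grpc;

use helloworld::*;
use helloworld_grpc::*;

fn main() {
    // Ignore the result: some env_logger versions return an error if a logger is already set.
    let _ = ::env_logger::init();

    // Plain-text (non-TLS) HTTP/2 connection to the load balancer's external IP on port 80.
    let client = GreeterClient::new_plain("35.240.13.93", 80, Default::default()).unwrap();

    let mut req = HelloRequest::new();
    req.set_name("Graham".to_string());

    // wait() blocks until the call completes and yields
    // (initial metadata, reply, trailing metadata).
    let res = client.say_hello(grpc::RequestOptions::new(), req);
    println!("{:?}", res.wait());
}
```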

Running the client against the load balancer should correctly route through all the layers of madness and give us a sensible and expected result!

```shell
$ cargo run --bin client
    Finished dev [unoptimized + debuginfo] target(s) in 0.17s
     Running `target/debug/client`
Ok((Metadata { entries: [MetadataEntry { key: MetadataKey { name: "server" }, value: b"nginx" }, MetadataEntry { key: MetadataKey { name: "date" }, value: b"Thurs, 1 Jan 2019 13:55:24 GMT" }, MetadataEntry { key: MetadataKey { name: "content-type" }, value: b"application/grpc" }] }, message: "Zomg, it works!", Metadata { entries: [] }))
```

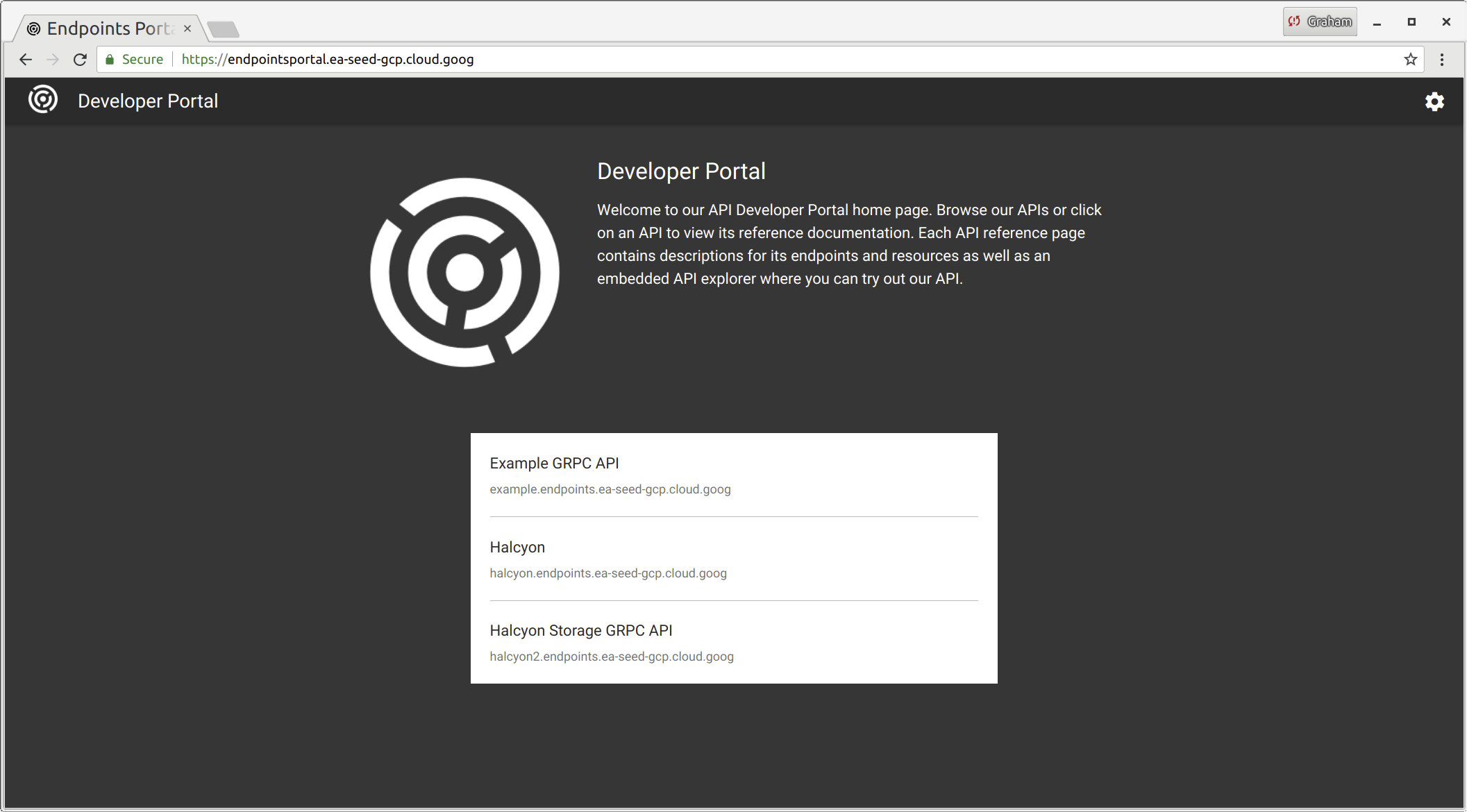

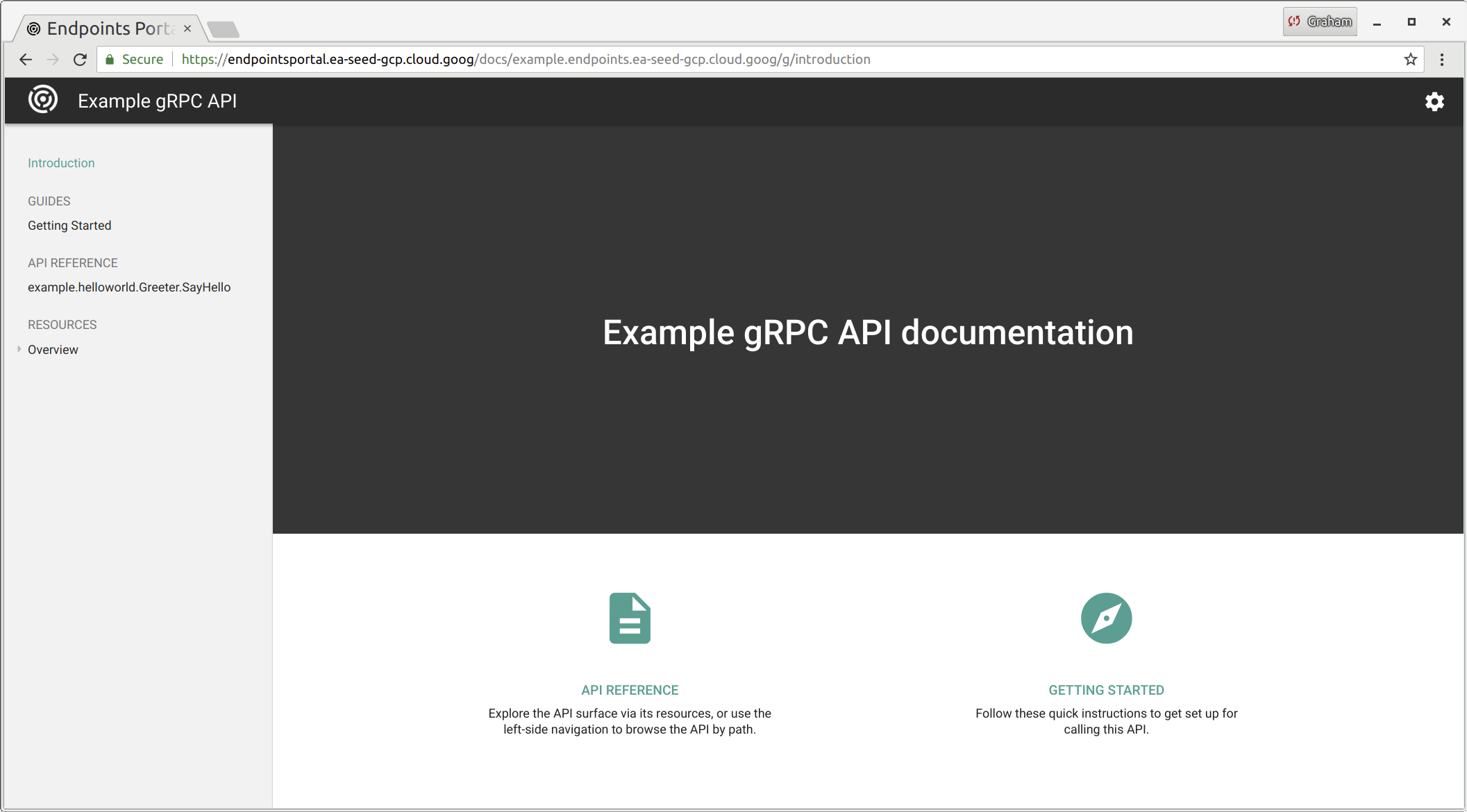

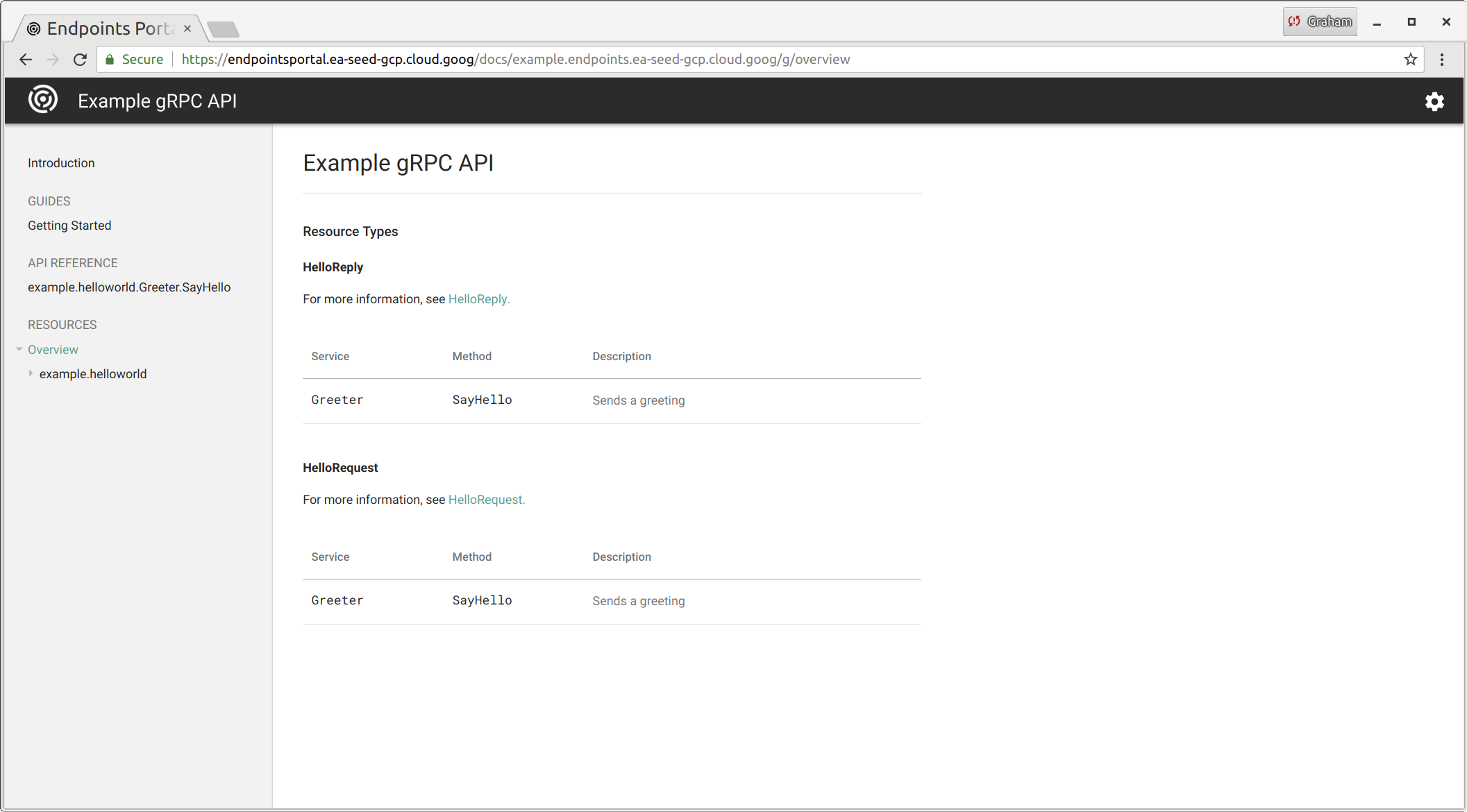

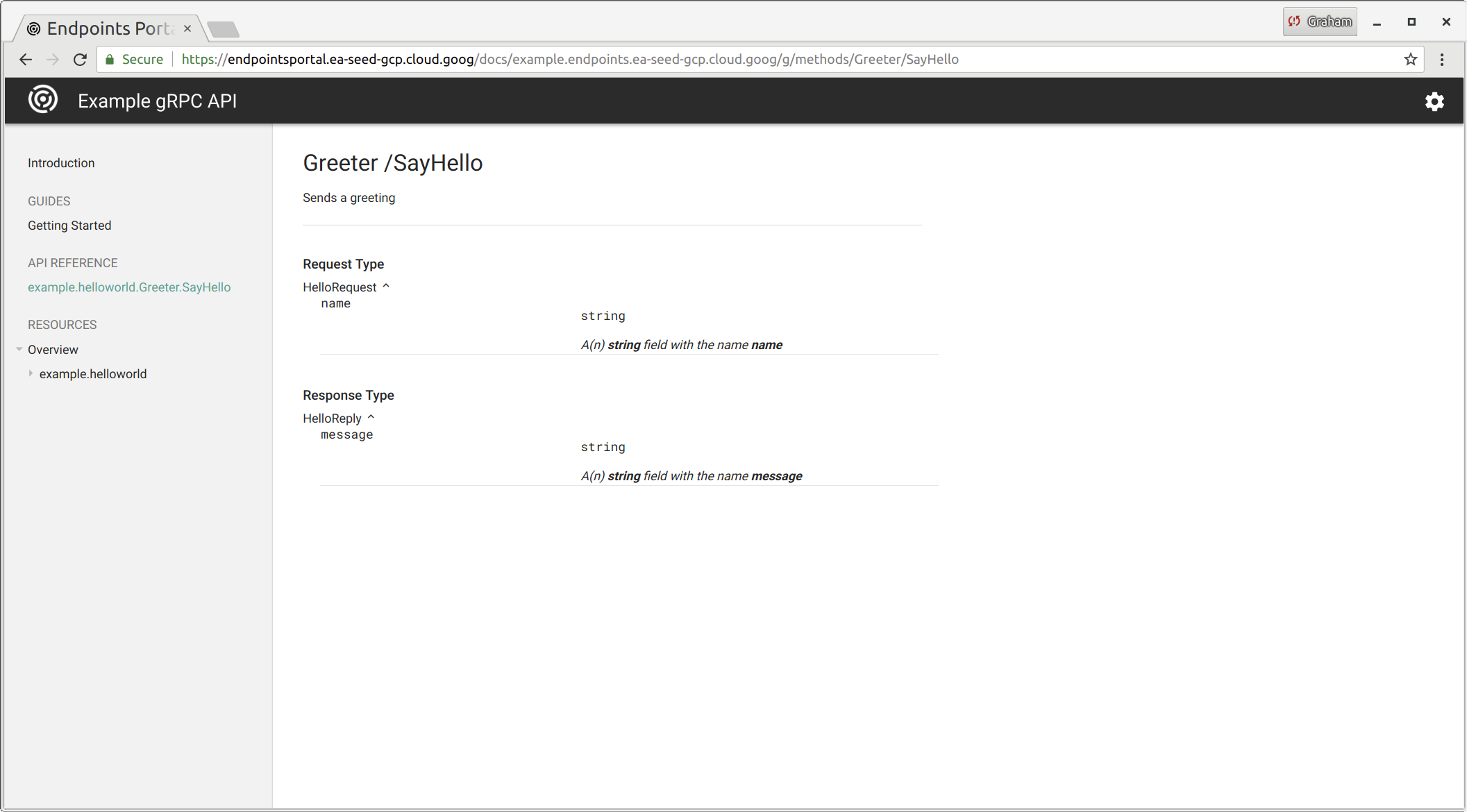

Endpoint Developer Portal

All endpoints are automatically added to a developer portal (here, https://endpointsportal.ea-seed-gcp.cloud.goog/), along with documentation generated from the protobuf definitions, which can be given proper descriptions using a variety of annotations.

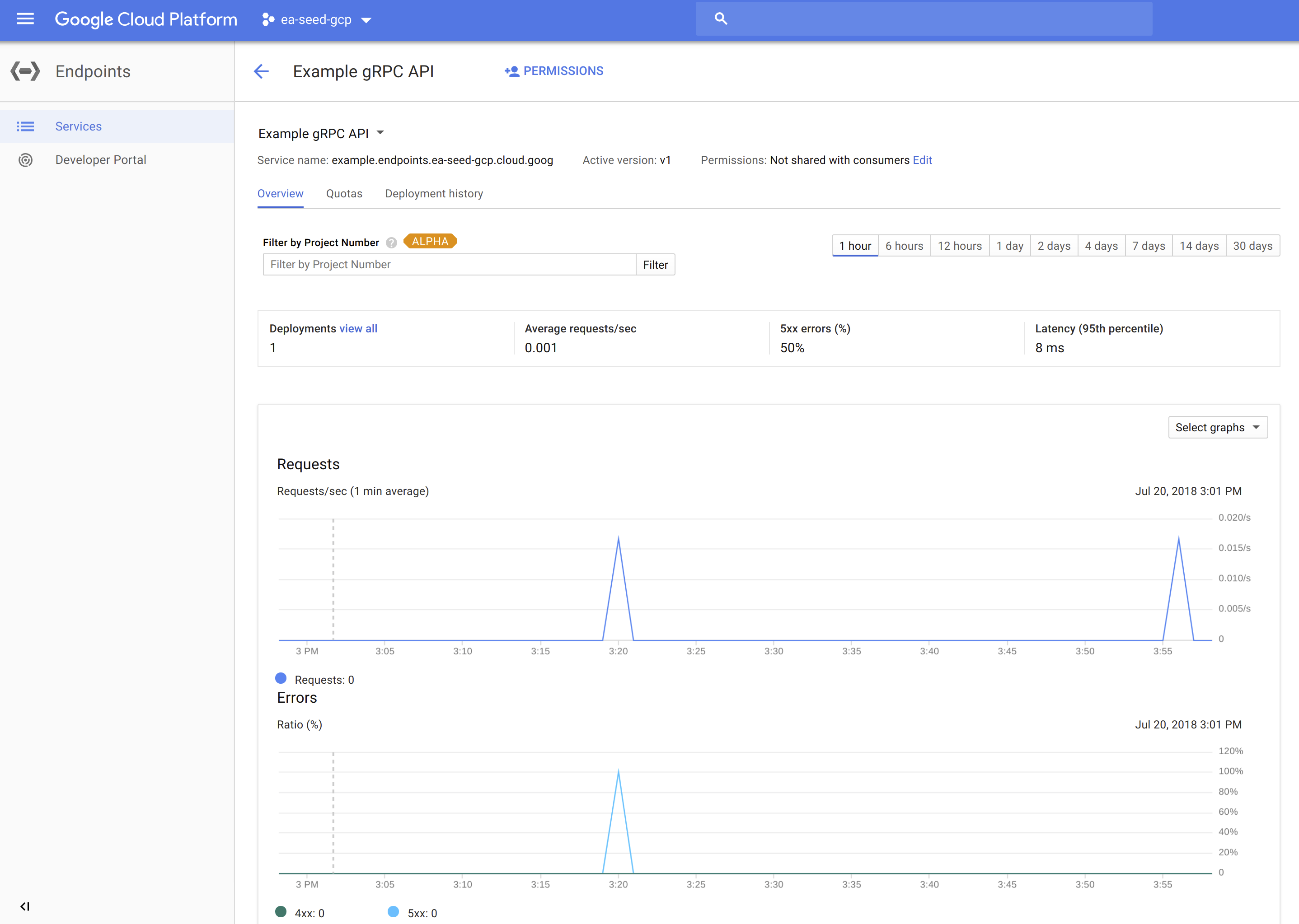

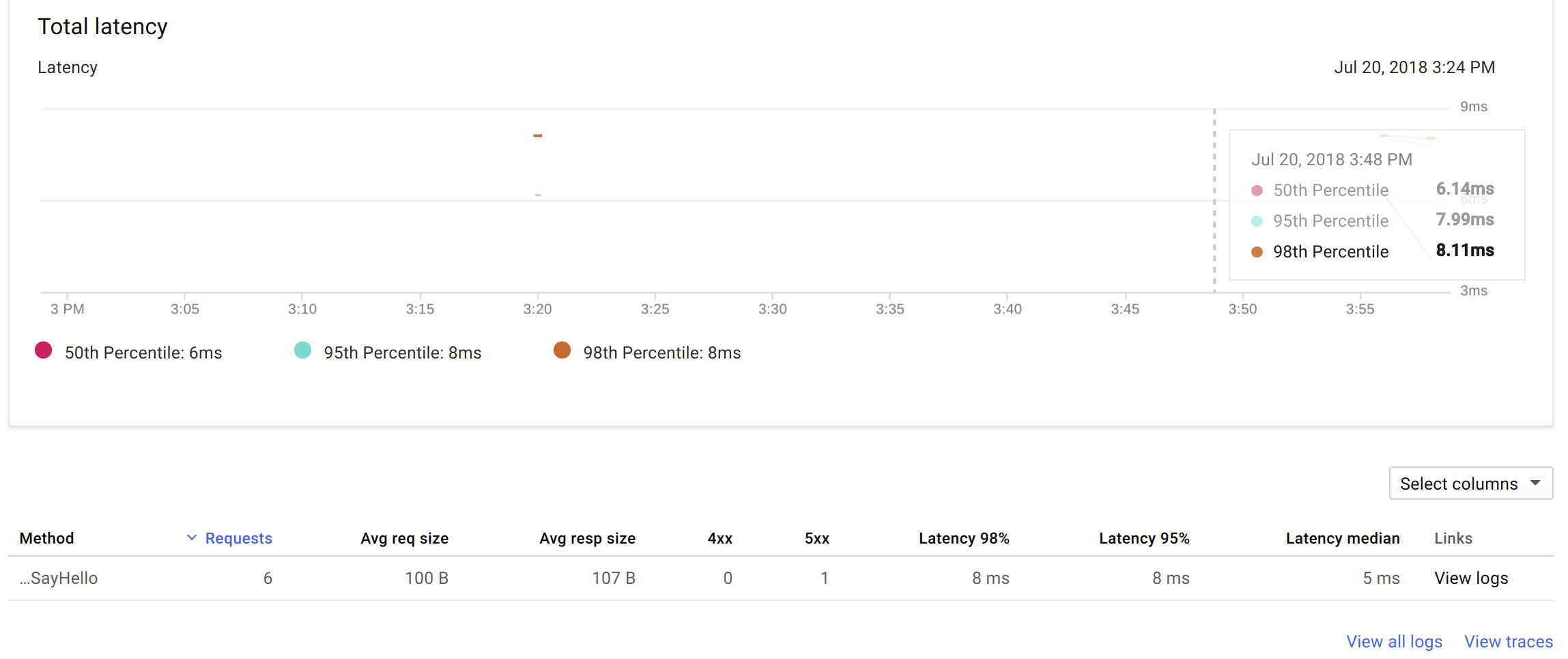

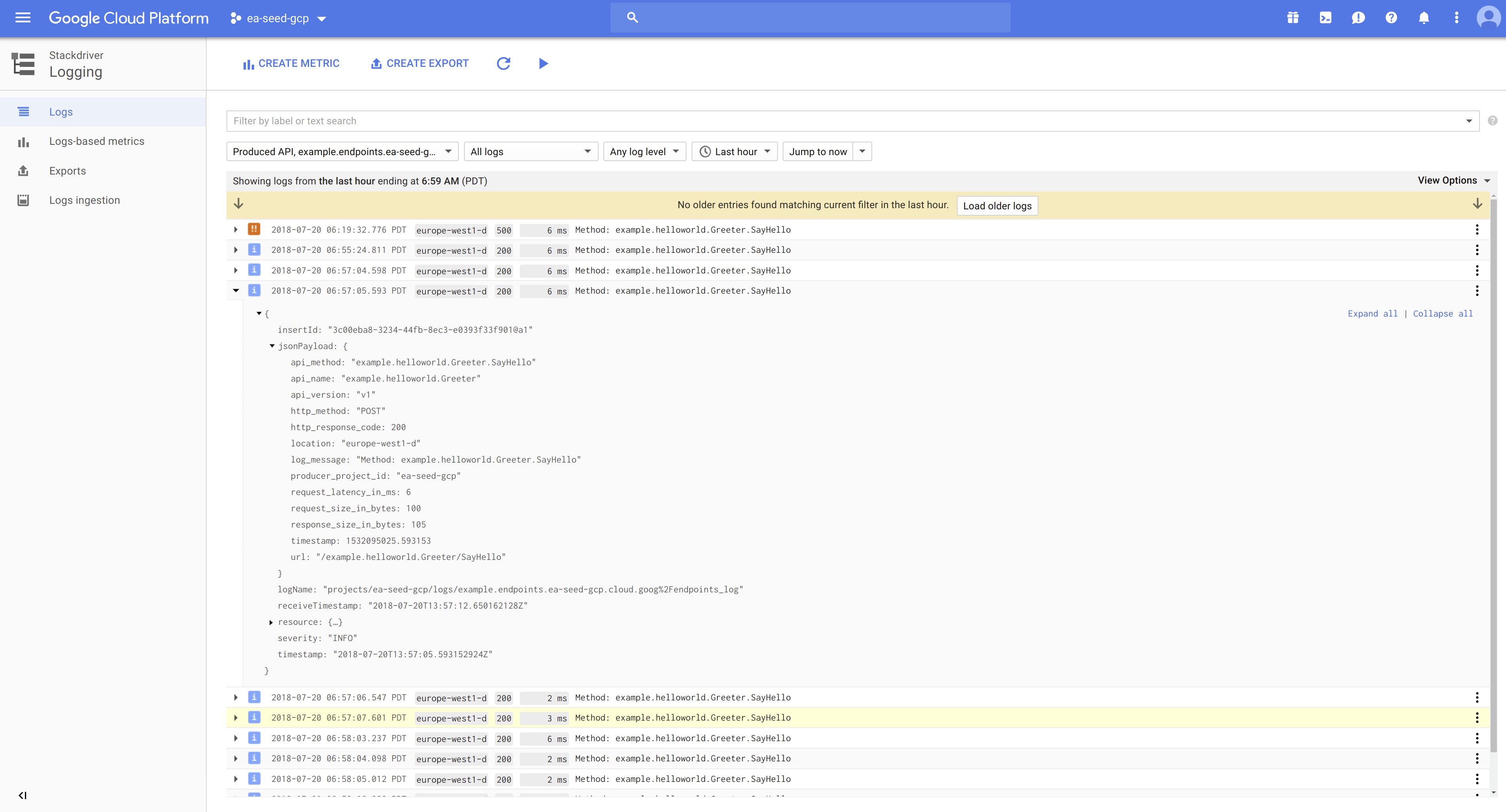

Endpoint Stackdriver Logs, Tracing, Monitoring

Using Google Cloud Endpoints, we automatically get extensive logging, tracing, monitoring, profiling, etc., with many interconnected features across the Google Cloud ecosystem (especially Stackdriver).

Future Improvements

- Look into connecting VMs to Google Cloud Endpoints (i.e. NOMAD Windows and macOS servers)

- HTTP/1.1 JSON/REST transcoding

- Investigate alternate load balancing schemes for gRPC

  - Custom L7 load balancing might be ideal in many cases (but would be a lot more work than just using a dumb round robin).

  - gRPC Load Balancing
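The round-robin point above hinges on a gRPC detail: a Kubernetes LoadBalancer service balances TCP connections, while a gRPC client multiplexes many calls over one long-lived HTTP/2 connection, so every call on that connection lands on the same pod. Balancing per request instead (in the client or in an L7 proxy) spreads individual calls. Below is a minimal, std-only sketch of per-request round-robin selection, with hypothetical backend addresses and none of the health checking a real balancer needs.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Per-request round-robin picker over a fixed list of backend addresses.
/// Sketch only: no health checks, weighting, or re-resolution.
struct RoundRobin {
    backends: Vec<String>,
    next: AtomicUsize,
}

impl RoundRobin {
    fn new(backends: Vec<String>) -> Self {
        assert!(!backends.is_empty(), "need at least one backend");
        RoundRobin { backends, next: AtomicUsize::new(0) }
    }

    /// Backend for the next call. fetch_add makes this safe to share across
    /// threads; the counter wraps on overflow, causing at most one uneven step.
    fn pick(&self) -> &str {
        let i = self.next.fetch_add(1, Ordering::Relaxed) % self.backends.len();
        &self.backends[i]
    }
}

fn main() {
    // Hypothetical pod addresses; in practice they would come from service discovery.
    let rr = RoundRobin::new(vec!["10.0.0.11:9000".to_string(), "10.0.0.12:9000".to_string()]);
    for _ in 0..4 {
        println!("send next call to {}", rr.pick());
    }
}
```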